Why faster coding with Copilot slows down delivery without an AI SDLC

Adopting GitHub Copilot and similar assistants has become the industry standard. Market data confirms the trend: by early 2025, over 15 million developers were using GitHub Copilot, a fourfold increase in just 12 months. Consequently, organisations anticipate significant performance gains and a rapid return on investment (ROI) across entire projects.

Increased productivity at the individual developer level primarily consists of accelerated code generation. Such local efficiency does not automatically translate into improved velocity for the entire team.
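The gap between individual and team-level gains can be made concrete with Amdahl's law. If coding accounts for a fraction p of a task's end-to-end lead time and AI makes coding s times faster, the whole task speeds up by only 1 / ((1 - p) + p / s). The sketch below is illustrative: the 30% coding share is an assumed figure, not one taken from the research cited in this article.

```python
def end_to_end_speedup(coding_fraction: float, coding_speedup: float) -> float:
    """Amdahl's law: overall speed-up when only the coding share of lead time gets faster."""
    if not 0.0 <= coding_fraction <= 1.0:
        raise ValueError("coding_fraction must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    # Review, testing, integration and waiting time (1 - p) are left unchanged.
    remaining = (1.0 - coding_fraction) + coding_fraction / coding_speedup
    return 1.0 / remaining


# Illustrative assumption: coding is 30% of a task's lead time.
for speedup in (1.5, 2.0, 10.0):
    overall = end_to_end_speedup(0.3, speedup)
    print(f"coding {speedup:.1f}x faster -> task {overall:.2f}x faster")
```

Under this assumption, 1.5x, 2x and 10x faster coding yield roughly 1.11x, 1.18x and 1.37x faster tasks, and no coding speed-up can push the task beyond 1 / (1 - p) ≈ 1.43x. The remaining stages have to change as well.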

The fault lies not with the tools themselves but with the absence of a comprehensive implementation methodology. Deploying AI without a systemic framework invites operational chaos: it accelerates the accumulation of technical debt and often creates new process bottlenecks.

Research from the MIT Media Lab indicates that only 5% of AI projects currently deliver tangible business value. The situation is critical, which makes it essential to identify the primary cause of this “productivity paradox” and to determine how to achieve genuine acceleration.

Key information

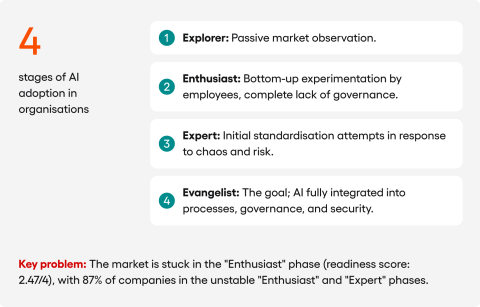

- Companies remain trapped in the “Enthusiast” phase (the average readiness score is just 2.47/4), characterised by bottom-up AI adoption without strategic governance.

- AI tools accelerate code generation (by up to 55%) but create a systemic bottleneck by overburdening senior developers during code review.

- Generative AI introduces complex new categories of technical debt, such as “Prompt Drift” and “Explainability Debt”.

- Transitioning from unstructured adoption to a structured methodology allows organisations to complete tasks 3-5x faster and reduce production errors by 30-50%.

The diagnosis: why AI generates chaos rather than profit

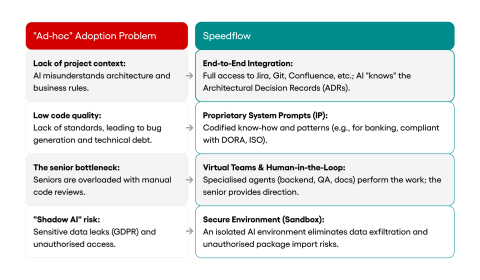

Before discussing solutions, we must precisely diagnose why bottom-up, uncontrolled AI adoption proves ineffective and risky.

The ROI gap: a lack of evidence

We must address the fundamental discrepancy between market promises and actual ROI. While expectations are high, verifiable data from successful, completed deployments remains scarce.

Many stakeholders view this gap as a simple metric shortfall: they expect 15% efficiency gains but achieve only 5%. Analysts at MIT Sloan, however, argue that the issue is far more structural. The industry anticipates profits, while most organisations (outside the technology giants) are still absorbing the costs of prolonged experiments and pilots.

Consequently, the discourse has shifted. Discussion now focuses less on “realised ROI” and more on “implementation friction” and the high “failure rate of pilot projects”. Far too many initiatives are abandoned before their value can be calculated.

Operational chaos: pressure for implementation without governance

Such chaos is exacerbated by immense executive pressure. The EY CEO Outlook Pulse Survey 2024 reveals that 76% of CEOs demand that AI translate into immediate operational improvements. These demands drive reckless implementation, as teams rush to demonstrate activity rather than results.

The AI Adoption Index 2.0 divides organisational AI maturity into four stages: Explorer, Enthusiast, Expert and Evangelist.

With a market readiness score of just 2.47/4, the average company remains firmly stuck in the “Enthusiast” phase. Furthermore, 87% of enterprises sit in the unstable territory between “Enthusiast” and “Expert”: they have adopted AI but lack control over it.

McKinsey calls this deficiency the “governance gap”. An ad-hoc approach bypasses essential safeguards such as data tagging protocols, access controls and IP protection. Rather than merely failing to reduce risk, it actively generates it.

New categories of technical debt

Research confirms that GenAI introduces new forms of technical debt invisible to standard static analysis tools.

- Prompt-Related Technical Debt: Business logic migrates from version-controlled code into unstructured prompts. A subtle change in a prompt (“Prompt Drift”) can silently disrupt functionality.

- Model & Data Debt: Systems become fragile. An external API update (“Model Drift”) can break an application even if the internal code remains untouched.

- Explainability Debt: We are creating “black box” systems. If an AI makes an erroneous decision in a financial or medical context and the logic cannot be audited, the organisation faces significant legal and operational liability.
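One lightweight way to make Prompt Drift visible is to treat prompts like code: keep them in version control and pin a fingerprint of each reviewed version, so a CI check fails whenever a prompt changes without approval. The registry, function names and prompt text below are hypothetical. The check detects changes to prompt text only; behavioural drift caused by an external model update still needs regression tests on model outputs.

```python
import hashlib

# Hypothetical registry of approved prompt fingerprints, updated only through code review.
APPROVED_PROMPTS: dict[str, str] = {}


def fingerprint(prompt_text: str) -> str:
    """SHA-256 of the prompt after trimming outer and trailing whitespace, so cosmetic edits are ignored."""
    lines = [line.rstrip() for line in prompt_text.strip().splitlines()]
    return hashlib.sha256("\n".join(lines).encode("utf-8")).hexdigest()


def approve(name: str, prompt_text: str) -> None:
    """Record the reviewed version of a prompt."""
    APPROVED_PROMPTS[name] = fingerprint(prompt_text)


def has_drifted(name: str, prompt_text: str) -> bool:
    """True if the prompt differs from its approved version or was never approved."""
    approved = APPROVED_PROMPTS.get(name)
    return approved is None or approved != fingerprint(prompt_text)


# Usage, e.g. inside a CI test: fail the build when a prompt changes without review.
REFUND_PROMPT = "You are a payments assistant.\nNever approve refunds above the configured limit."
approve("refund_policy", REFUND_PROMPT)
assert not has_drifted("refund_policy", REFUND_PROMPT + "  \n")
assert has_drifted("refund_policy", REFUND_PROMPT.replace("Never", "Always"))
```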

The senior developer bottleneck

Here we reach the core paradox. Accelerating junior developers has created a significant bottleneck at the senior level.

The senior workload has not decreased; it has fundamentally shifted. Seniors now spend less time writing code and more time reviewing large volumes of AI-generated output. While AI handles simple syntax well, it struggles with architectural coherence and complex business logic.

Seniors must now verify code they did not write, validating it against architectural standards and checking for subtle logical flaws. Instead of architecting solutions, your most valuable people act as “human debuggers”. GitHub may report that developers code up to 55% faster, yet the workflow as a whole still stalls. As analysts note, writing code is rarely the primary bottleneck in enterprise software; the constraint has simply moved and become more difficult to resolve.
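The review bottleneck is essentially a capacity problem: if AI-assisted developers open pull requests faster than seniors can review them, the review queue grows every day, however quickly the code was written. The daily figures in this sketch are illustrative assumptions, not measurements.

```python
def review_backlog(days: int, opened_per_day: int, reviewed_per_day: int) -> list[int]:
    """PRs still waiting for senior review at the end of each day."""
    backlog = 0
    history = []
    for _ in range(days):
        backlog += opened_per_day
        # Seniors clear at most their daily review capacity.
        backlog -= min(backlog, reviewed_per_day)
        history.append(backlog)
    return history


# Illustrative assumption: seniors can review 10 PRs per day.
print(review_backlog(5, opened_per_day=8, reviewed_per_day=10))   # [0, 0, 0, 0, 0]
print(review_backlog(5, opened_per_day=14, reviewed_per_day=10))  # [4, 8, 12, 16, 20]
```

With a first-in, first-out queue, each new PR in the second scenario waits behind an ever longer line, so time-to-merge keeps rising even though the code itself was produced faster.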

Growing legal risk: “Shadow AI” vs GDPR and DORA

Security represents the final, non-negotiable hurdle. Uncontrolled usage, often termed “Shadow AI”, is not a theoretical risk. It is an active threat generating real costs.

According to Varonis, 99% of organisations have sensitive data (e.g. source code, customer data) exposed to unauthorised AI access.

One in five firms (20%) has already reported a security breach caused directly by “Shadow AI”, with incidents costing an average of 670,000 dollars.

Security organisations such as OWASP have published a dedicated Top 10 list for LLM applications. It includes vectors like “Prompt Injection” and “Sensitive Information Disclosure”, the latter a direct GDPR concern whenever personal data reaches a model.

The financial sector is particularly exposed. Under DORA (the EU Digital Operational Resilience Act), banks face strict operational resilience requirements. Yet, according to Bright Defense, 80% of CISOs admit that AI allows attackers to develop new attack vectors faster than defences can adapt.
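For the “Sensitive Information Disclosure” risk named above, one minimal mitigation is to scrub prompts before they leave the organisation. The sketch below uses a few illustrative regular expressions (email addresses, IBAN-like strings and strings that look like API keys). These patterns are assumptions for the example and fall far short of a complete data-loss-prevention policy.

```python
import re

# Illustrative patterns only; a production rule set needs review and testing.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("IBAN", re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b")),
    ("SECRET", re.compile(r"\b(?:sk|api|key)[-_][A-Za-z0-9]{16,}\b")),
]


def redact(prompt: str) -> str:
    """Replace each match with a placeholder before the prompt is sent to an external model."""
    for label, pattern in REDACTION_RULES:
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


print(redact("Refund jan.kowalski@example.com to PL61109010140000071219812874"))
# Refund [EMAIL] to [IBAN]
```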

Our response: investment in R&D as a strategic requirement

As a technology partner, we bill not for “using AI” but for concrete outcomes: enterprise-grade software delivered within agreed timeframes.

We recognised early on that chaotic AI adoption is a strategic dead end. It generates debt, risk, and costs that ultimately burden the client and slow long-term project progress.

We faced a binary choice: either accept the limitations of off-the-shelf tools, or invest in building a proprietary methodology that resolves the diagnosed issues of context, quality and risk.

Building the framework meant tackling genuine R&D challenges: how to provide AI with full project context, how to structure expert knowledge into a repeatable process, and how to guarantee data security.

Speedflow: a methodology derived from practice

Speedflow is not merely a plugin. It is a proprietary methodology and framework from Speednet that integrates AI directly into the Software Development Life Cycle (SDLC). The system addresses the specific failure points identified in the first section.

How do we solve the context problem? Through end-to-end integration

Consider the practical difference using a typical task: “Add a new payment method”.

- The “Chaos” Approach: A developer requests code from Copilot, which generates a function. The developer then spends hours manually checking Confluence for validation standards and verifying dependencies. The code reaches review and is rejected for breaching a decision recorded in an Architecture Decision Record (ADR). Time is lost.

- The Speedflow Approach: Our system ensures the AI agent automatically receives the relevant ADRs and validation standards from Confluence alongside the Jira ticket. It generates code that is architecturally compliant from the outset. The senior reviews business logic rather than boilerplate compliance.
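The difference between the two approaches lies in what the model sees. The sketch below shows the general idea of context assembly: select the ADRs whose tags match the ticket's components and place them in the prompt alongside the ticket. All class names, tags, ticket keys and ADR contents are hypothetical and held in memory; this is not Speedflow's implementation, and a real integration would fetch the data through the Jira and Confluence APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    key: str
    summary: str
    components: set[str]


@dataclass
class Adr:
    adr_id: str
    title: str
    decision: str
    tags: set[str] = field(default_factory=set)


def build_context(ticket: Ticket, adrs: list[Adr]) -> str:
    """Put the ticket and every ADR tagged with one of its components into a single prompt."""
    relevant = [adr for adr in adrs if adr.tags & ticket.components]
    lines = [f"Task {ticket.key}: {ticket.summary}", "", "Binding architecture decisions:"]
    if relevant:
        lines.extend(f"- {adr.adr_id} {adr.title}: {adr.decision}" for adr in relevant)
    else:
        lines.append("- none found")
    return "\n".join(lines)


ticket = Ticket("PAY-123", "Add a new payment method", {"payments"})
adrs = [
    Adr("ADR-007", "Payment adapters", "New payment methods implement the PaymentGateway port.", {"payments"}),
    Adr("ADR-012", "Structured logging", "All services log in JSON.", {"observability"}),
]
print(build_context(ticket, adrs))
```

Only ADR-007 reaches the prompt, so the model is constrained by the decision that actually applies rather than by everything in the wiki.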

How do we solve the quality problem? Through proprietary system prompts (IP)

Speedflow relies on our own intellectual property. We have codified years of banking and fintech experience into a library of system prompts designed to ensure that generated code is secure, auditable, and compliant with regulations such as DORA and GDPR. Rather than relying on generic training data, we use patterns tested in demanding enterprise environments.

How do we solve the risk problem? Through a secure environment and independence

We treat “Shadow AI” as a critical threat. All AI operations occur within a secure, isolated “Sandbox” environment, preventing uncontrolled data leakage. Furthermore, our framework is model-agnostic (compatible with Claude, GPT, Gemini), eliminating the risk of vendor lock-in and ensuring business continuity regardless of any single provider’s pricing policy.
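Model independence is usually achieved with an adapter layer: business code depends on one small interface, each vendor sits behind its own adapter, and switching or falling back to another provider leaves business logic untouched. In this sketch the adapters are stubs with hypothetical names that return canned text; real adapters would wrap each vendor's SDK.

```python
from typing import Protocol


class ModelClient(Protocol):
    """The only interface business code depends on."""

    name: str

    def complete(self, prompt: str) -> str: ...


class StubClient:
    """Stand-in for a vendor adapter; a real one would wrap that vendor's SDK."""

    def __init__(self, name: str, available: bool = True) -> None:
        self.name = name
        self.available = available

    def complete(self, prompt: str) -> str:
        if not self.available:
            raise ConnectionError(f"{self.name} unavailable")
        return f"[{self.name}] {prompt}"


def complete_with_fallback(clients: list[ModelClient], prompt: str) -> str:
    """Try providers in order of preference and return the first successful answer."""
    errors = []
    for client in clients:
        try:
            return client.complete(prompt)
        except ConnectionError as exc:
            errors.append(str(exc))
    raise RuntimeError("all providers failed: " + "; ".join(errors))


clients = [StubClient("provider-a", available=False), StubClient("provider-b")]
print(complete_with_fallback(clients, "Review this diff"))  # [provider-b] Review this diff
```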

Result: what this means for clients

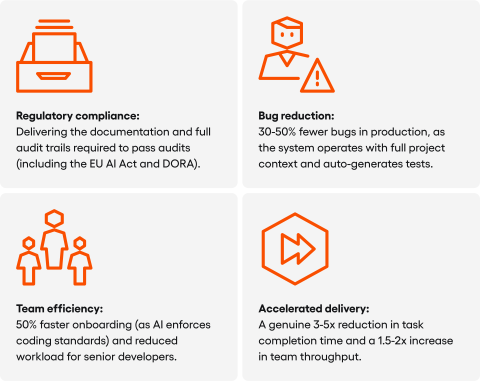

Investing in Speedflow was a means to an end: fulfilling the promise of creating enterprise software faster, cheaper, and with superior quality. Our methodology converts chaos into measurable results.

Compliance: We mitigate regulatory risk by providing complete, automated audit trails for every interaction. This ensures your development process remains transparent and fully aligned with stringent frameworks like DORA and the AI Act, turning compliance into a seamless background process rather than a manual hurdle.

Efficiency: Operational efficiency improves as we directly tackle the bottleneck of senior developer overload. By acting as an active, context-aware knowledge base, the AI relieves seniors of repetitive guidance, which in practice reduces onboarding time for new hires by 50%.

Quality: We observe 30-50% fewer errors reaching production because the system operates with full awareness of your project’s architecture. Instead of just writing code, it automatically generates comprehensive test suites based on the full context, catching defects at the moment of creation.

Velocity: These factors combine to unlock genuine delivery speed. Rather than just writing lines of code faster, our methodology allows teams to complete entire tasks 3-5 times faster, ultimately achieving 1.5-2 times higher throughput for the department as a whole.
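The Compliance point above depends on audit trails that cannot be quietly edited after the fact. A common generic technique is a hash chain: each record stores the hash of the previous one, so altering or deleting any earlier entry breaks verification. The sketch below uses hypothetical record fields and is not a description of how Speedflow stores its logs. In practice the latest hash is also stored or signed externally (otherwise an attacker could rewrite the whole chain), and a hash chain alone does not establish DORA or AI Act compliance.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always produces the same hash.
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()


def append(log: list[dict], actor: str, action: str, detail: str) -> None:
    """Add a record that is chained to the previous one."""
    prev = log[-1]["hash"] if log else GENESIS
    record = {"actor": actor, "action": action, "detail": detail, "prev": prev}
    record["hash"] = _digest(record)
    log.append(record)


def verify(log: list[dict]) -> bool:
    """Recompute every hash; editing or deleting any record except the latest breaks the chain."""
    prev = GENESIS
    for record in log:
        body = {key: value for key, value in record.items() if key != "hash"}
        if body.get("prev") != prev or _digest(body) != record.get("hash"):
            return False
        prev = record["hash"]
    return True


log: list[dict] = []
append(log, "ai-agent", "generate_code", "ticket PAY-123")
append(log, "senior-dev", "approve", "merge request for PAY-123")
print(verify(log))  # True
log[0]["detail"] = "ticket PAY-999"
print(verify(log))  # False
```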

Conclusions: the tool is not the strategy

Copilot is a tool, not a strategy. Relying on it alone can slow the overall process down even as code production accelerates. Companies optimise individual tasks and get faster coding, but this local gain does not translate into systemic efficiency.

The data we analysed strongly supports this conclusion. Gains from faster typing are quickly negated by three bottlenecks: the code review queue, the complex debugging of new forms of technical debt such as “Prompt Drift”, and the remediation costs of security breaches and regulatory non-compliance.

The “Enthusiast” phase of adoption is a blind alley. Real competitive advantage does not stem from purchasing licences. It derives from the capability to implement AI systemically.

When choosing a software partner, one must ask a critical question. Does their AI strategy conclude at purchasing Copilot, or is it grounded in a proven SDLC framework that guarantees acceleration, quality, and compliance?

This blog post was created by our team of experts specialising in AI Governance, Web Development, Mobile Development, Technical Consultancy, and Digital Product Design. Our goal is to provide educational value and insights without marketing intent.